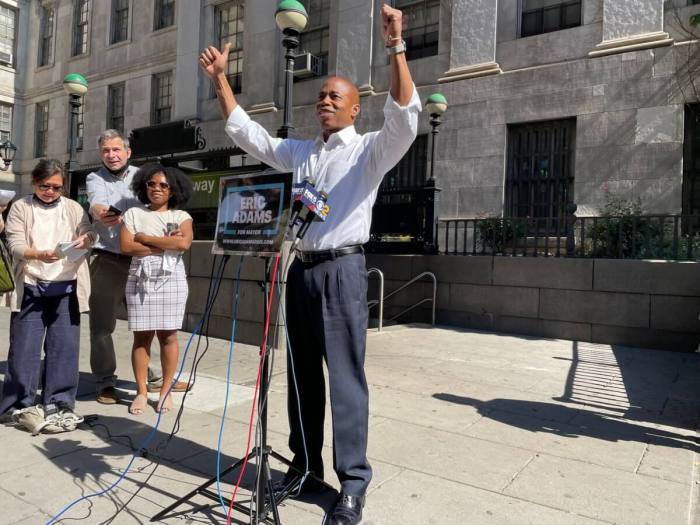

As New York City Mayor Eric Adams continues to fine-tune his crime prevention strategies in response to a precipitous rise in violence across the boroughs, one potential avenue the centrist Democrat is exploring is the expansion of facial recognition as a key crime-curbing tool.

Crime in the city has escalated by nearly 40%, and the Bronx saw a 22.6% rise in overall crime — including a 150% increase in shootings — compared to January 2021.

Adams believes that modern technology can be used accurately and ethically to identify suspects and violent offenders throughout the city, and he is expected to expand its use in the city’s policing efforts, although his 15-page Blueprint to End Gun Violence does not spell out the details.

“If you’re on Facebook, Instagram, Twitter — no matter what, they can see and identify who you are without violating the rights of people,” Adams said late last month as he pushed a new plan to end gun violence. “It’s going to be used for investigatory purposes.”

But the use of facial recognition technology has long been controversial and the subject of various civil rights and constitutional lawsuits. And while Adams looks to build his crime prevention and policing plan around facial recognition and gun detection technology, other jurisdictions, from liberal-leaning San Francisco to conservative Missouri in the Midwest, have criticized its use as an invasion of privacy at best and anti-democratic at worst.

San Francisco’s “Stop Secret Surveillance” ordinance passed 8-1 in a May 2019 Board of Supervisors vote, imposing an all-out ban on city agencies’ use of facial surveillance, a technology that companies such as Amazon and Microsoft currently sell to various U.S. government agencies.

“The propensity for facial recognition technology to endanger civil rights and civil liberties substantially outweighs its purported benefits,” an excerpt from the San Francisco ordinance reads. “…and the technology will exacerbate racial injustice and threaten our ability to live free of continuous government monitoring.”

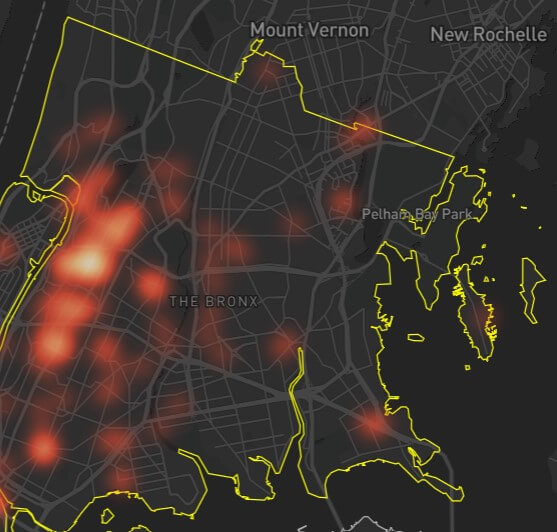

According to Amnesty International, an international human rights organization, the NYPD has more than 15,280 surveillance cameras at intersections across the city, with 3,470 sited in the Bronx.

“You are never anonymous,” said Matt Mahmoudi, Artificial Intelligence & Human Rights Researcher at Amnesty International. “Whether you’re attending a protest, walking to a particular neighborhood, or even just grocery shopping — your face can be tracked by facial recognition technology using imagery from thousands of camera points across New York.”

According to the Pew Research Center, a think tank, 75% of adults in the U.S. either know very little or nothing about facial recognition technology. Privacy concerns aside, various civil rights groups call the use of facial recognition a predatory practice that racially disenfranchises people of color.

In the Bronx, the most heavily surveilled hot spots (areas with more than three NYPD surveillance cameras at streetlights, traffic signals or poles in a single neighborhood) are the Grand Concourse, Fordham and Tremont sections, which have some of the highest concentrations of Black and brown residents in the borough.

“Mayor Adams is returning to the same failed playbook of centering police in public safety policies,” said Scott Roberts, senior director of Criminal Justice and Democracy Campaigns at online racial justice organization Color Of Change. “This will only lead to the same discriminatory, violent tactics that disproportionately target Black communities. Communities in New York City and across the country are rightly demanding that elected officials expand our public safety options, invest in education, jobs, affordable housing and mental health services.”

An October report published by Color Of Change found that surveillance and facial recognition technologies not only demonstrated racial bias but also led to wrongful arrests of Black men in New Jersey, Michigan, the aforementioned San Francisco, Oakland, Boston and Jackson, Mississippi.

California placed a temporary moratorium on law enforcement’s use of facial recognition, Massachusetts instituted a ban, and cities in states from Oregon to Maine have banned the technology over concerns about bias, with error rates of up to 35% for women of color, the report reads.

Findings from a 2019 study by the National Institute of Standards and Technology showed that facial recognition programs across the country tended to be less accurate when analyzing Black or Asian individuals.

“The issue is that we know facial recognition has shown to be highly inaccurate when identifying Black and brown folks and it has shown to lead to false arrests, false identification and we’ve seen in places like Detroit and New York that there have been false identifications using this technology,” said Brown.

The NYPD has used facial recognition technology made by DataWorks Plus since 2011, analyzing footage captured by cameras at the scenes of robberies, burglaries, assaults, shootings and other crimes, and the department credits the tool with solving murders, rapes and missing persons cases. Despite the department’s insistence that facial recognition is used “in a narrow capacity,” its use of the technology has led to at least six lawsuits.

But Adams, a retired police captain, wants to take the technology a few steps further.

“We will also move forward on using the latest in technology to identify problems, follow up on leads and collect evidence — from facial recognition technology to new tools that can spot those carrying weapons, we will use every available method to keep our people safe,” Adams said at a Jan. 24 press briefing.

Activists say cities still adopt facial recognition technology in their policing despite citizen backlash because it is widely supported by police foundations, the private nonprofit organizations that raise money for police departments and related activities. New York City started the first police foundation in 1971, and an estimated 76% of funding from police foundations is allocated toward “technology and equipment,” including security equipment such as cameras, lighting and license plate readers.

Roberts, of Color Of Change, said that funding would be better used if it were diverted back into the communities that have been most heavily policed or seen as “high-crime” areas. Last year, advocates pushed for the passage of Daniel’s Law at the state level, a measure that would mandate that mental health professionals, not police, respond to crisis calls statewide.

“I believe public safety comes from stability in communities, whether that’s access to good jobs, mental health services, education. We need to think outside the box when it comes to how we approach crime and choosing how we invest in our communities,” said Roberts. “Our concern is that we’re using so much resources that are not preventing crime, but also perpetuate the racial bias in the (criminal justice) system.”

Recently, Democratic lawmakers in both the Senate and House called on federal agencies to curb their use of facial recognition, asking the departments of Justice, Defense, Homeland Security and the Interior to stop using Clearview AI’s facial recognition system for domestic law enforcement.

“Clearview AI’s technology could eliminate public anonymity in the United States,” the lawmakers wrote to the agencies in their letters condemning the software. They said that, combined with the facial recognition system, the database of billions of photos Clearview scraped from social media platforms “is capable of fundamentally dismantling Americans’ expectation that they can move, assemble or simply appear in public without being identified.”

Reach Robbie Sequeira at rsequeira@schnepsmedia.com or (718) 260-4599.