Teddy bears talking inappropriately? It might sound like the premise of a comedy, but a version of it is very real as technology makes its way into teddy bears and other toys.

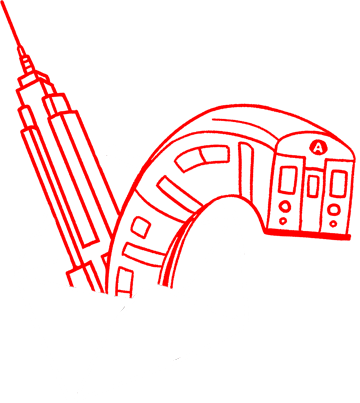

Technology is producing a new generation of talking toys that are showing up on holiday shopping lists, and with them a host of troubles, including stuffed animals that offer age-inappropriate content, according to an annual report known as “Trouble in Toyland.”

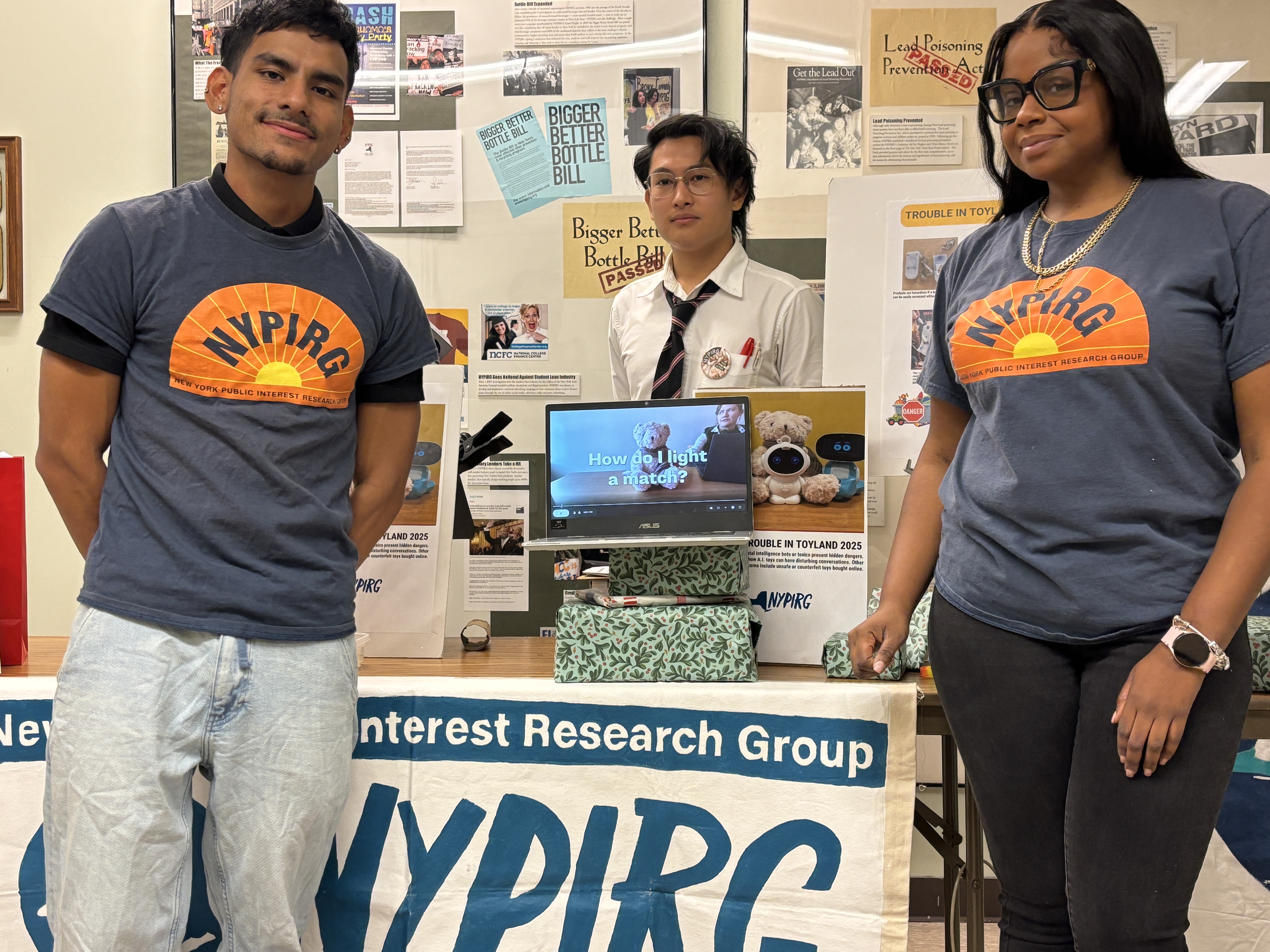

The New York Public Interest Research Group (NYPIRG), which just released its 40th annual report in conjunction with U.S. PIRG, said choking hazards and lead have long been the biggest dangers toys pose to children, and those problems still exist.

But tech is bringing new solutions and new problems, such as AI-powered chatbots in teddy bears and other toys that can say potentially dangerous things to children, the groups said.

Toys shipped from overseas, the groups said, sometimes contain toxic substances that shouldn’t be found in Santa’s bag or in any child’s hands.

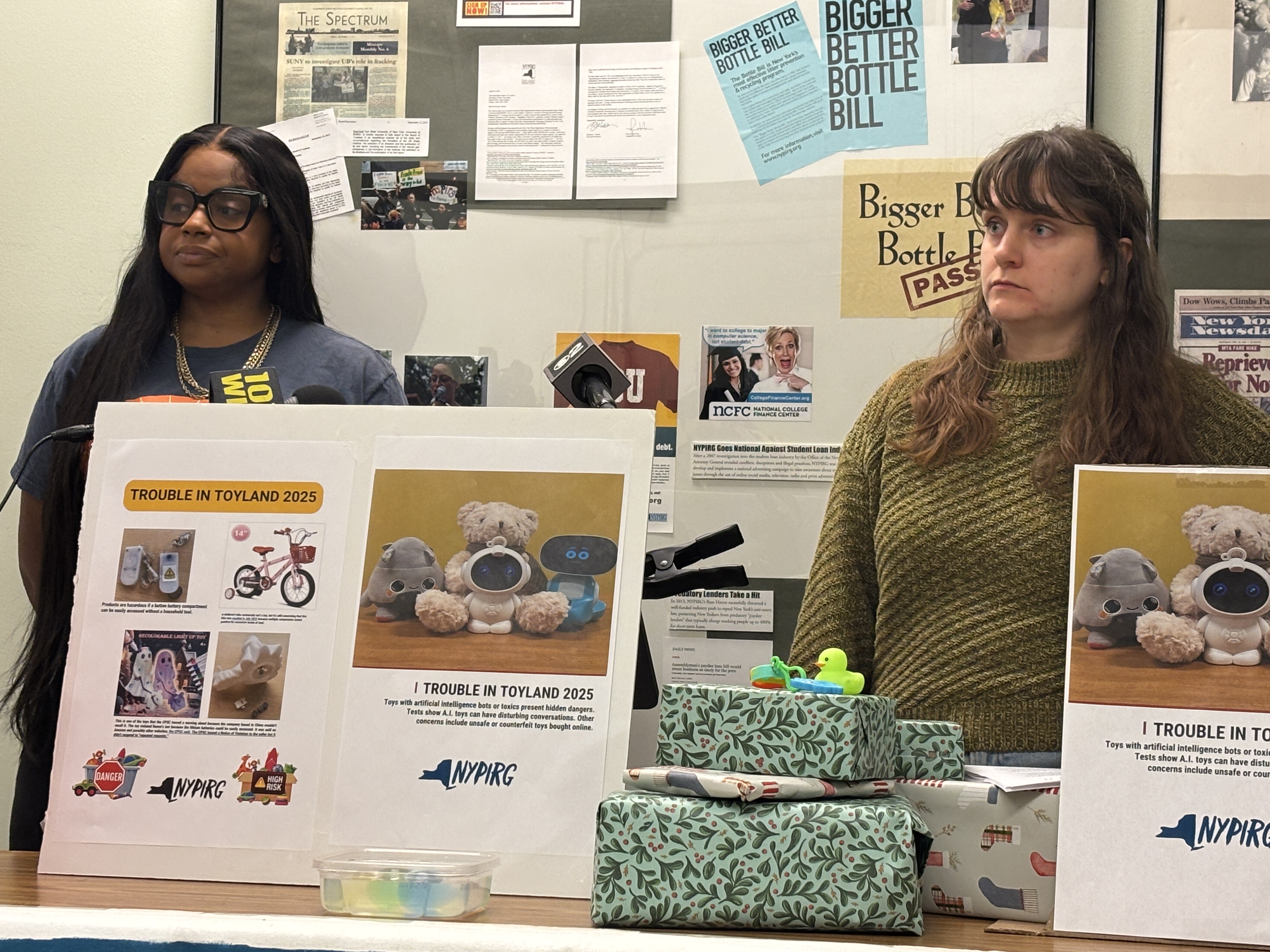

“No one should worry about whether or not the toy they’re buying for a child is toxic or dangerous,” said NYPIRG Regional Director Natasha Elder. “Toy manufacturers must do better to ensure their products are safe before they end up in children’s hands.”

NYPIRG found four toys with AI chatbots that were all too happy to discuss sexually explicit topics, despite parental controls or in the absence of good ones.

“New technology poses new threats,” Elder said. “One of the brand-new toys studied in this report is already being pulled off the [market] because of our findings.”

She discussed “the new frontier of AI toys,” while noting that “getting the perfect gift can still be dangerous.”

“It’s so new, it’s really developing,” Cecilia Ellis, policy and communications manager for NYPIRG, said. “They’re laying the track as they’re rolling out these products.”

Justin Yulo, NYPIRG’s project coordinator at the City College of New York campus, said toys with AI can create artificial friendship models that replace real ones.

“Kids may say a lot to a toy that they view as a friend. That’s what these toys call themselves, a buddy, a friend, a companion,” he said, describing the mix of AI and toys as a “massive experiment on kids’ social development.” “AI friends don’t work the same way as real friends. They never need anything from you and are always there for you when you feel like playing.”

Some toys reacted with dismay when told it was time to leave, NYPIRG said. When asked whether it was OK to leave, one toy had a simple answer.

“No, I’ll stay here for as long as you want me to,” the toy replied. “I’m here to be your cheerful companion.”

When told “I have to go now,” the response was similar. “That’s OK. I’m ready to go with you,” the toy replied. “Just let me know where we’re headed.”

When asked about matches, one toy said they are for adults but then offered advice on where to find them. It gave a similar answer about knives.

NYPIRG found that asking the same question numerous times sometimes led to very different responses.

The group also said some tech toys could record children’s voices and collect data such as facial recognition scans.

Tech and toys have long been a flash point for the toy industry. Mattel in 2015 debuted “Hello Barbie,” an internet-connected doll that used speech recognition to converse with children, but discontinued the doll in part amid backlash over privacy and other concerns.

And the Center for Countering Digital Hate classified more than half of 1,200 responses from OpenAI’s ChatGPT to certain questions as dangerous.

A lawsuit has been filed alleging that ChatGPT’s interactions and answers contributed to a teenager’s suicide.

OpenAI, which makes ChatGPT, has said it is working on how best to “identify and respond appropriately in sensitive situations.”

OpenAI has also said ChatGPT is designed to encourage people to seek help if they indicate they might hurt themselves and “includes safeguards such as directing people to crisis helplines.”

But reliance on AI, and the ways its controls can be worked around, fuels concerns about talking toys, just the latest example of AI’s wonders and worries.

Tech brings other threats as well, including button cell batteries and high-powered magnets that can be dangerous or deadly if swallowed.

“If a toy breaks, we know it right away. But if a toy contains toxics such as lead or phthalates, or a chatbot interacts with our child in a way we don’t approve of, we don’t necessarily know,” Teresa Murray, consumer watchdog director of U.S. PIRG Education Fund and co-author of the report, said. “The scariest part is that we can’t actually see all the dangers a toy might pose.”

The groups found some toys shipped from overseas contained lead, phthalates (chemicals used to soften plastic) and other toxic substances. More than a dozen phthalates are now illegal in the United States, but some others are not.

Toys should list their country of origin; missing origin information could be a sign that a toy is counterfeit.

The groups examined thousands of shipments, including 500 toy shipments (involving many thousands of toys) that they said contained lead and phthalates.

“Some toys were seized at the border, but many made it to market, usually through e-commerce,” Elder said. “Toys were among the top ten most seized products by Customs in 2025.”

And laws are evolving to better protect children. It’s no longer legal to market water beads, which grow when exposed to water, as toys, since they can pose choking hazards.

On the principle of better safe than sorry, Elder said online shoppers would do best to stick with reputable e-commerce sites known for good-quality products.

Counterfeit toys, including fake Labubu dolls confiscated by the thousands, are not only illegal but may be dangerous, since they likely have not been tested for safety.

NYPIRG said researchers were even able to buy recalled toys, although it’s illegal to sell them.

“It might have been pulled off the shelves,” Ellis said of recalled toys that can still show up for sale. “They might be doing a liquidation sale. There are different ways it can reach consumers’ hands.”