Senior Judge William Alsup of the Northern District of California recently issued a highly anticipated summary judgment opinion in Bartz v. Anthropic PBC. It is one of the first of many decisions expected to address infringement claims brought by copyright holders against generative AI companies like Anthropic, OpenAI, and Perplexity.

In Bartz, the plaintiffs are authors whose books were used to train Anthropic’s popular Claude LLMs. The Court’s decision drew a sharp distinction between two issues — whether using copyrighted books to train a generative AI system constituted fair use, and the legality of how those books were obtained and stored.

The Court granted summary judgment for Anthropic on the fair use question, finding that training Claude on copyrighted books was permissible under 17 U.S.C. § 107. However, it denied Anthropic’s motion for summary judgment with regard to Anthropic’s practice of acquiring and retaining a large, centralized internal library of pirated books.

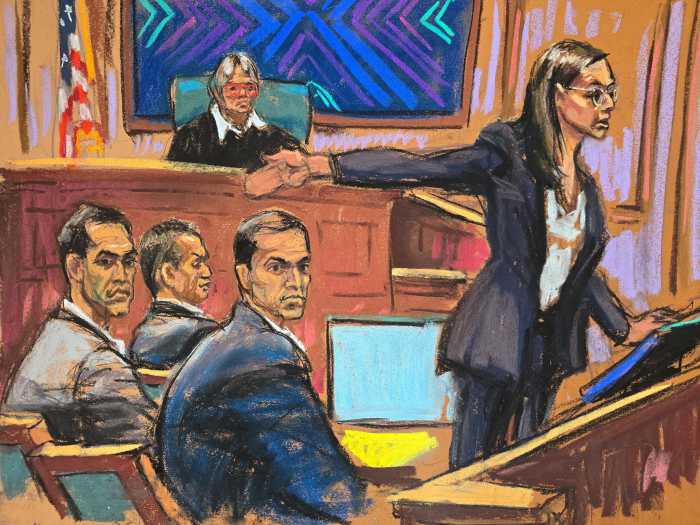

While this opinion is not binding on other courts, the decision may be influential, particularly in the high-profile lawsuits consolidated in the Southern District of New York. That multidistrict litigation (MDL) includes claims by authors, newspapers and other content creators against OpenAI. Judge Alsup’s decision also offers a useful early framework on how judges, regulators and companies could approach copyright compliance in this rapidly developing area.

Training LLMs on Books Is Protected by Fair Use

The Court ruled that Anthropic’s use of books to train its LLMs qualified as fair use. The analysis focused on the first and fourth statutory fair use factors: the purpose and character of the use, and the effect on the market for the original works.

When evaluating the purpose and character of the use, courts often ask whether the use is “transformative.” Judge Alsup did not mince words or treat this as a close call, writing that the “purpose and character of using works to train LLMs was transformative – spectacularly so.” Elsewhere, the Court described the training process as “quintessentially transformative.” As the Court put it, “Like any reader aspiring to be a writer, Anthropic’s LLMs trained upon works not to race ahead and replicate or supplant them – but to turn a hard corner and create something different.” The Court was careful to note that Claude does not output full books or lengthy excerpts; if it did, the case would look different.

As to market harm, the Court rejected the plaintiffs’ argument that training Claude on their books displaced, or will displace, a market for licensing copyrighted materials for AI training. This aspect of the decision is notable because a market for licensing copyrighted materials to train LLMs does exist. The Court reasoned, however, that “such a market for that use is not one the Copyright Act entitles Authors to exploit.”

Significantly, Judge Alsup assumed that Claude “memorized” parts of the books in training but held that this did not, in itself, negate fair use. He also distinguished between the training process and specific outputs, making clear his decision did not address whether Claude might, in specific instances, output infringing content.

This portion of the decision is a major development and could lay the groundwork for further judicial approval of the methods used to train the LLMs that power tools like ChatGPT and Claude.

Retention of Pirated Works as a “Library” Was Not Protected by Fair Use

While the Court was receptive to fair use in the context of training, it flatly rejected Anthropic’s defense of its acquisition and long-term storage of over seven million pirated books. These books were downloaded from shadow library sites like Pirate Library Mirror and Library Genesis and stored in a central repository that Anthropic employees could access for model training and internal research. Notably, the Court relied in part on an internal Anthropic email in which an employee was tasked with obtaining “all the books in the world” while avoiding as much “legal/practice/business slog” as possible.

The Court held this retention was not fair use. Judge Alsup emphasized that the books were not merely downloaded temporarily for specific training runs but were kept long-term, indexed, and made broadly available within the company. “This order doubts that any accused infringer could ever meet its burden of explaining why downloading source copies from pirate sites that it could have purchased or otherwise accessed lawfully was itself reasonably necessary to any subsequent fair use,” such as training an LLM. He further noted that prior case law demonstrates “why Anthropic is wrong to suppose that so long as you create an exciting end product, every ‘back-end step, invisible to the public,’ is excused.” He also distinguished the Google Books ruling on the ground that the books Google scanned in that case were lawfully acquired. By contrast, the Court found that Anthropic’s separate practice of purchasing millions of print books, scanning them, and destroying the print copies did constitute fair use.

The decision clearly signals that copyright compliance in the AI context must address not just how works are used but also how they are acquired and handled internally. The decision also has practical consequences for companies building or using training datasets. Even where the end use of the material is transformative, the Court held that acquiring copyrighted works through illicit means, and storing them for future reference, is not shielded by fair use and can give rise to standalone liability under the Copyright Act.

How This May Influence Other AI Copyright Cases

Judge Alsup’s decision provides a framework that may prove persuasive: it emphasizes the transformative nature of training while drawing a bright line against pirated acquisition. Still, it is premature to assume courts around the country will follow suit. In the Southern District of New York, the MDL judges may view the issues differently, particularly where plaintiffs can point to more specific evidence of the licensing of copyrighted materials for AI training.

Key Compliance Takeaways

The Bartz ruling underscores the importance of proactive copyright risk management. Most notably, the decision makes clear that how you acquire and handle training materials is just as important as how your models use them.

This places a premium on cleaning training data. Companies should consider carefully auditing the sources of their training corpora, removing unauthorized works, documenting material provenance, and — where possible — seeking licenses or relying on compliant data providers. Vendor contracts with GenAI providers should also be reviewed to ensure datasets provided for AI training do not contain unauthorized copyrighted content, and that indemnities cover potential copyright exposure.

Brendan Palfreyman is a partner at Harris Beach Murtha who writes and speaks frequently on issues of Artificial Intelligence.
